The 6th International Competition on Human Identification at a Distance (HID 2025)

Overview

Welcome to the 6th International Competition on Human Identification at a Distance (HID 2025)! The competition will be held in conjunction with IJCB 2025.

The 6th International Competition on Human Identification at a Distance (HID 2025) addresses the critical challenge of video-based human identification in noisy environments, where subtle inter-person distinctions must be captured under complex real-world conditions. This competition provides participants with preprocessed human body silhouettes and reference source code to facilitate algorithmic design. The competition also employs an automated evaluation system through CodaLab. The benchmark is the SUSTech-Competition dataset, a large gait database with 859 unique subjects.

Awards

Generously sponsored by Watrix Technology, the competition offers a total prize pool of 19,000 CNY (approximately 2,660 USD) to the top six performing teams.

- First Prize (1 team): 10,000 CNY (~1,500 USD)

- Second Prize (2 teams): 3,000 CNY (~450 USD)

- Third Prize (3 teams): 1,000 CNY (~150 USD)

where CNY stands for Chinese Yuan.

Important Dates

The timeline for the competition is as follows.

- Competition starts: February 28, 2025

- Deadline of the 1st phase: May 6, 2025

- Deadline of the 2nd phase: May 16, 2025

- Competition results announcement: May 26, 2025

- Submitting competition summary paper: June 23, 2025

How to Join the Competition?

The competition is open to everyone except members of the organizers’ teams.

Click CodaLab HID 2025 to register and join the competition.

Note: Please register with your institutional email address. Do NOT use a gmail.com or qq.com address; otherwise, the registration will not be approved.

If you have any questions, please contact Dr. Jingzhe Ma <majz2020@mail.sustech.edu.cn> and CC Prof. Shiqi Yu <yusq@sustech.edu.cn>.

If you have a WeChat account, you can scan Dr. Jingzhe Ma’s QR code. He can invite you to the WeChat group for this competition. It is optional. The notifications sent through emails will also be announced in that WeChat group.

Competition Sample Code

You should develop all the source code you need for the competition. You can use OpenGait to help you start your training quickly. There are some well-trained gait recognition models in the OpenGait repo.

You can find the HID 2025 code tutorials here for testing and submission, and here for preparing your dataset, writing config files, and training your model with OpenGait.

How to Achieve a Good Result

The organizers published summary papers on the previous competitions, HID 2023 and HID 2024, at the International Joint Conference on Biometrics in 2023 and 2024. The papers can be downloaded below:

- International Joint Conference on Biometrics in 2023

- S. Yu et al., “Human Identification at a Distance: Challenges, Methods and Results on HID 2023,” 2023 IEEE International Joint Conference on Biometrics (IJCB), Ljubljana, Slovenia, 2023, pp. 1-8, doi: 10.1109/IJCB57857.2023.10448952.

@INPROCEEDINGS{hid2023,

author={Yu, Shiqi and Wang, Chenye and Zhao, Yuwei and Wang, Li and Wang, Ming and Li, Qing and Li, Wenlong and Wang, Runsheng and Huang, Yongzhen and Wang, Liang and Makihara, Yasushi and Ahad, Md Atiqur Rahman},

booktitle={2023 IEEE International Joint Conference on Biometrics (IJCB)},

title={Human Identification at a Distance: Challenges, Methods and Results on HID 2023},

year={2023},

pages={1-8}}

- International Joint Conference on Biometrics in 2024

- S. Yu et al., “Human Identification at a Distance: Challenges, Methods and Results on the Competition HID 2024,” 2024 IEEE International Joint Conference on Biometrics (IJCB), Buffalo, NY, USA, 2024, pp. 1-8, doi: 10.1109/IJCB62174.2024.10744507.

@INPROCEEDINGS{hid2024,

author={Yu, Shiqi and Wu, Weiming and Hu, Jiacong and Wang, Zepeng and Wang, Jingjie and Zhang, Meng and Wang, Runsheng and Ni, Yunfei and Huang, Yongzhen and Wang, Liang and Rahman Ahad, Md Atiqur},

booktitle={2024 IEEE International Joint Conference on Biometrics (IJCB)},

title={Human Identification at a Distance: Challenges, Methods and Results on the Competition HID 2024},

year={2024},

pages={1-8}}

Dataset and Evaluation Protocol

Dataset

Download options for the test gallery set and the probe sets are provided below:

- Baidu Netdisk

- Gallery data. Password: hid6

- Probe data of phase 1. Password: hid6

- Probe data of phase 2. Password: hid6

- Google Drive

Because accuracy in the HID 2022 competition was close to saturation, we have used a more challenging dataset, SUSTech-Competition, since HID 2023. SUSTech-Competition was collected in the summer of 2022 with the approval of the Southern University of Science and Technology Institutional Review Board. It contains 859 subjects, and the data collection covered many variations, including clothing, carrying conditions, and view angles. To reduce participants’ pre-processing burden, we provide human body silhouettes. The silhouettes are obtained from the original videos by a deep person detector and a segmentation model provided by our sponsor, Watrix Technology.

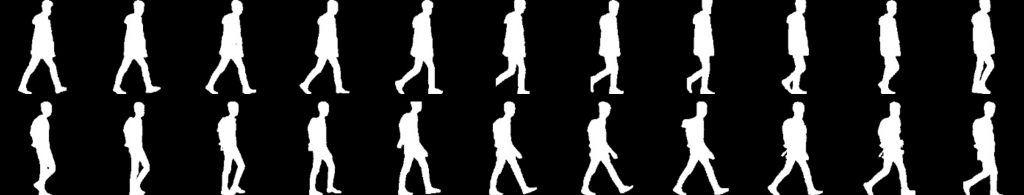

All silhouette images are resized to a fixed size of 128 x 128. We do not remove bad-quality silhouettes manually. As shown in the figure, the silhouettes are not of perfect quality and contain the kind of noise found in real applications. This noise makes the competition more challenging and a better simulation of real applications.

Starting from HID 2023, we do not provide a training set to participants. Participants can use any other dataset, such as CASIA-B, OUMVLP, or CASIA-E, to train their algorithms.

Note: Samples from any test probe set, including those of HID 2023 and HID 2024 and all test probe samples provided in HID 2025, are strictly prohibited from being used in any way during the training phase.

The cross-domain challenge should be considered for achieving good results. The gallery set contains only one sequence from each subject. The labels of the sequences in the gallery set will be provided. The probe set contains 5 randomly selected sequences from each subject. In the first phase, only 10% of the probe set will be released to participants to avoid label hacking. The remaining 90% will be released in the second phase.
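The gallery/probe setup above can be illustrated with a minimal sketch, assuming each sequence has already been reduced to a fixed-length feature vector by some gait model. The function names, the Euclidean distance, and the toy 2-D features are all hypothetical choices for illustration; the competition does not prescribe a feature extractor or a distance measure.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def identify(probe_features, gallery):
    """Assign each probe the label of its nearest gallery sequence.

    gallery: dict mapping subject label -> feature vector
             (one labeled sequence per subject, as in the gallery set).
    probe_features: list of feature vectors whose labels are unknown.
    """
    predictions = []
    for feat in probe_features:
        best_label = min(gallery, key=lambda label: euclidean(feat, gallery[label]))
        predictions.append(best_label)
    return predictions


# Toy 2-D features (hypothetical; real features come from a trained model).
gallery = {"1": [0.0, 0.0], "2": [1.0, 1.0], "3": [5.0, 5.0]}
probes = [[0.1, -0.2], [4.0, 4.5], [0.9, 1.2]]

predictions = identify(probes, gallery)
print(predictions)  # ['1', '3', '2']
```

Because the gallery holds a single sequence per subject, every probe is matched against exactly one reference per identity, so the quality of the learned features matters more than any gallery-side aggregation.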

Evaluation protocol

The evaluation should be user-friendly and convenient for participants. It should also be fair and resistant to hacking. The detailed rules are as follows:

- To avoid the ID labels of the probe set being hacked by numerous submissions, we will limit the number of submissions each day to 2. Only one CodaLab ID is allowed for a team.

- The accuracy will be evaluated automatically at CodaLab. The ranking will be updated on the scoreboard accordingly.

- The first phase lasts about 68 days, but only 10% of the probe samples are used for evaluation in this phase.

- The second phase lasts only 10 days. The remaining 90% of the probe samples are used for evaluation. This data is different from that in the first phase.

- The top 10 teams on the final scoreboard must send their programs to the organizers. The programs will be run to reproduce their results. The reproduced results must be consistent with the results shown on the CodaLab scoreboard; otherwise, the results will not be considered.
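As a quick check of the phase lengths quoted above, the dates from the Important Dates section can be subtracted directly. This is only an arithmetic sketch; the variable names are illustrative, and the submission ceiling assumes the daily limit of 2 applies on every calendar day of the first phase, counted inclusively, which the rules do not state explicitly.

```python
from datetime import date

# Dates taken from the Important Dates section.
start = date(2025, 2, 28)
phase1_deadline = date(2025, 5, 6)
phase2_deadline = date(2025, 5, 16)

phase1_days = (phase1_deadline - start).days            # elapsed days in phase 1
phase2_days = (phase2_deadline - phase1_deadline).days  # elapsed days in phase 2

# Assumption: 2 submissions allowed on each calendar day, both end days included.
phase1_calendar_days = phase1_days + 1
max_phase1_submissions = 2 * phase1_calendar_days

print(phase1_days, phase1_calendar_days, phase2_days, max_phase1_submissions)
```

The difference between the two dates is 67 days, or 68 calendar days when both end dates are counted, which matches the "about 68 days" stated in the rules.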

Ethics

Privacy and human ethics are our concerns for the competition. Technologies should improve human life and not violate human rights.

The dataset for the competition was collected by the Southern University of Science and Technology, China in 2022. The data collection and usage have been approved by the Southern University of Science and Technology Institutional Review Board (The approval form is attached in the appendix). It contains 859 subjects and was not released to the public in the past. All subjects in the dataset signed agreements to acknowledge that the data could be used for research purposes only. When we release the data during the competition, we will also ask the participants to agree to use the data for research purposes only.

To avoid potential privacy issues, we provide participants only with binary silhouettes of human bodies, not RGB frames. The human IDs are labeled with numbers such as 1, 2, 3, … rather than real identity information. In addition, we do not provide the gender, age, height, or other similar information about the subjects.

Performance metric

Rank-1 accuracy is used to evaluate the methods from different teams. It is straightforward and easy to implement:

$$\text{Accuracy} = \frac{TP}{N}$$

where TP denotes the number of true positives, and N is the number of probe samples.
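A minimal sketch of this metric, assuming predictions and ground-truth labels are available as two equal-length lists (the function name and the toy labels are hypothetical; on CodaLab the ground truth is held by the organizers and the score is computed automatically):

```python
def rank1_accuracy(predicted_labels, true_labels):
    """Rank-1 accuracy: TP / N, where TP counts probes whose top match is correct."""
    if len(predicted_labels) != len(true_labels):
        raise ValueError("predictions and ground truth must have the same length")
    if not true_labels:
        raise ValueError("at least one probe sample is required")
    tp = sum(p == t for p, t in zip(predicted_labels, true_labels))
    return tp / len(true_labels)


# Toy example: 3 of 4 probes are identified correctly.
acc = rank1_accuracy(["1", "3", "2", "2"], ["1", "3", "2", "4"])
print(f"{acc:.2%}")  # 75.00%
```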

Organizers

Advisory Committee (Alphabetical order)

- Prof. Mark Nixon, University of Southampton, UK

- Prof. Tieniu Tan, Nanjing University, China; Institute of Automation, Chinese Academy of Sciences, China

- Prof. Yasushi Yagi, Osaka University, Japan

Organizing Committee (Alphabetical order)

- Prof. Md. Atiqur Rahman Ahad, University of East London, UK

- Prof. Yongzhen Huang, Beijing Normal University, China; Watrix Technology Co. Ltd, China

- Dr. Jingzhe Ma, Shenzhen Polytechnic University, China

- Prof. Manuel J Marin-Jimenez, University of Cordoba, Spain

- Prof. Liang Wang, Institute of Automation, Chinese Academy of Sciences, China

- Prof. Shiqi Yu, Southern University of Science and Technology, China

FAQ

Q: Can I use data outside of the training set to train my model?

A: Yes, you can. But you must describe what data you use and how you use it in the description of your method.

Q: How many members can my team have?

A: Teams may include up to 5 members, including supervisors (if applicable). Members can be from different institutions. However, only one participant from each team may submit results; otherwise, the results will be considered invalid.

Q: Who cannot participate in the competition?

A: The members of the organizers’ research group cannot participate in the competition. The employees and interns at the sponsor company cannot participate in the competition.

Leaderboard

Coming Soon